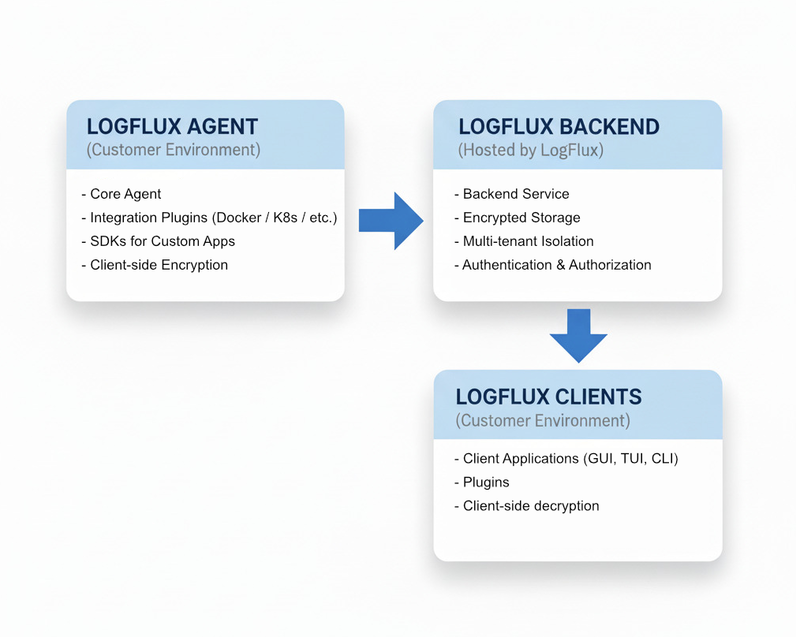

LogFlux is a distributed log management system built from three components that together handle log collection, storage, and analysis.

System Architecture

The LogFlux architecture consists of three primary components: the LogFlux Agent, the LogFlux Backend, and the LogFlux Clients.

Component Overview

1. LogFlux Agent - Log Collection and Encryption Layer

The LogFlux Agent runs in your environment and is responsible for collecting logs from your applications and infrastructure, encrypting them locally, and sending them to the LogFlux Backend. It provides multiple integration options to suit different use cases:

Core Agent

- Lightweight Agent: Minimal resource footprint for production environments

- Client-side Encryption: All logs encrypted with AES-256-GCM before transmission

- Resilient Architecture: Built-in retry logic and local buffering

- Multi-tenant Support: Secure isolation between different applications
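This page does not specify how the agent's retry logic works. The sketch below shows one common pattern, assuming a hypothetical `send` callable that raises `ConnectionError` on network failure. It uses capped exponential backoff with jitter, and once the attempts run out it hands the failure back to the caller so the payload can stay in the local buffer.

```python
import random
import time
from typing import Callable


def send_with_retry(
    send: Callable[[bytes], None],
    payload: bytes,
    max_attempts: int = 5,
    base_delay: float = 0.5,
    max_delay: float = 30.0,
    sleep: Callable[[float], None] = time.sleep,
) -> int:
    """Call send(payload) until it succeeds and return the number of attempts used.

    Retries only on ConnectionError, waiting with capped exponential backoff
    plus full jitter. Once max_attempts is exhausted the last error is re-raised,
    so the caller can keep the payload in its local buffer.
    """
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    for attempt in range(1, max_attempts + 1):
        try:
            send(payload)
            return attempt
        except ConnectionError:
            if attempt == max_attempts:
                raise
            # With the defaults: up to 0.5s, 1s, 2s, ... capped at max_delay.
            # Random jitter keeps many agents from reconnecting in lockstep.
            delay = min(max_delay, base_delay * 2 ** (attempt - 1))
            sleep(random.uniform(0, delay))
    raise RuntimeError("unreachable")
```

The `sleep` parameter is injectable, so tests can record the delays instead of actually waiting.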

Integration Plugins

The agent supports various integration plugins for common infrastructure components:

- Docker: Collect logs from Docker containers

- Kubernetes: Native Kubernetes log collection

- Nginx: Web server access and error logs

- systemd: System service logs

- File-based: Monitor log files and directories
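This page does not describe how the file-based plugin tracks its position in a file. A common approach, sketched below under that assumption, is to:

- remember a byte offset per file and read only what was appended since then;
- hold back a trailing partial line until its newline arrives;
- restart from the beginning when the file shrinks, for example after log rotation truncates it.

The function name `read_new_lines` is hypothetical.

```python
from __future__ import annotations

import os


def read_new_lines(path: str, offset: int) -> tuple[list[str], int]:
    """Return complete lines appended to `path` since `offset`, plus the new offset.

    A trailing partial line (no newline yet) is left for the next call.
    If the file is now smaller than `offset` (e.g. truncated by rotation),
    reading restarts from the beginning.
    """
    if os.path.getsize(path) < offset:
        offset = 0
    with open(path, "rb") as f:
        f.seek(offset)
        data = f.read()
    end = data.rfind(b"\n")
    if end == -1:
        return [], offset  # nothing complete yet
    complete = data[: end + 1]
    lines = complete.decode("utf-8", errors="replace").splitlines()
    return lines, offset + len(complete)
```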

SDKs for Custom Applications

Native libraries for integrating LogFlux directly into your applications:

- Go SDK: Native Go integration with structured logging

- Python SDK: Python logging handlers and async support

- JavaScript SDK: Node.js and browser-compatible logging

- Java SDK: Enterprise Java logging integration
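This page does not document the Python SDK's handler classes. The sketch below therefore uses only the standard library's `QueueHandler` and `QueueListener` to show the general pattern behind non-blocking logging. The application thread just enqueues records, and a background thread passes them to the handler that ships them. `CollectingHandler` is a hypothetical stand-in for that shipping handler.

```python
import logging
import logging.handlers
import queue


class CollectingHandler(logging.Handler):
    """Hypothetical stand-in for a handler that would ship records to the agent."""

    def __init__(self) -> None:
        super().__init__()
        self.records: list = []

    def emit(self, record: logging.LogRecord) -> None:
        self.records.append(record)


def attach_nonblocking(
    logger: logging.Logger, target: logging.Handler
) -> logging.handlers.QueueListener:
    """Route logger output through a queue so callers never block on I/O."""
    q: queue.Queue = queue.Queue(-1)  # unbounded here; a real agent would cap it
    logger.addHandler(logging.handlers.QueueHandler(q))
    listener = logging.handlers.QueueListener(q, target)
    listener.start()  # drains the queue on a background thread
    return listener
```

Calling `listener.stop()` at shutdown processes any records still in the queue before returning.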

Key Features

- Zero-knowledge Architecture: Encryption happens on your systems before transmission

- Flexible Integration: Choose plugins, SDKs, or direct API integration

- Batching and Compression: Efficient log transmission

- Local Buffering: Continue logging during network outages

- Configurable Retention: Set size limits on how much data the local buffer retains
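Local buffering, configurable limits, and batching with compression can be sketched together, assuming two things this page does not state:

- the buffer is capped by entry count and drops the oldest entries when full;
- batches are serialized as newline-delimited JSON.

`LocalBuffer` and `compress_batch` are hypothetical names.

```python
import collections
import gzip
import json


class LocalBuffer:
    """Bounded in-memory buffer: keeps at most `max_entries`, dropping the oldest."""

    def __init__(self, max_entries: int) -> None:
        self._entries: collections.deque = collections.deque(maxlen=max_entries)
        self.dropped = 0

    def append(self, entry: dict) -> None:
        if len(self._entries) == self._entries.maxlen:
            self.dropped += 1  # the deque evicts the oldest entry automatically
        self._entries.append(entry)

    def take_batch(self, batch_size: int) -> list:
        n = min(batch_size, len(self._entries))
        return [self._entries.popleft() for _ in range(n)]


def compress_batch(batch: list) -> bytes:
    """Serialize a batch as newline-delimited JSON and gzip it."""
    ndjson = "".join(json.dumps(e, separators=(",", ":")) + "\n" for e in batch)
    return gzip.compress(ndjson.encode("utf-8"))
```

In a pipeline like this, compression has to run before encryption. AES-GCM output is indistinguishable from random bytes, so compressing it afterwards saves nothing.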

2. LogFlux Backend - Hosted Storage and Processing

The LogFlux Backend is fully managed by LogFlux and handles encrypted log storage, indexing, and retrieval. All data remains encrypted at rest - only your clients can decrypt it.

Ingestor Service

- High-Performance Ingestion: Handles thousands of encrypted log entries per second

- Multi-tenant Isolation: Complete data separation between customers

- API Key Authentication: Secure, simple authentication without tokens

- Geographic Distribution: Available in multiple regions for data residency

- Rate Limiting and DDoS Protection: Enterprise-grade infrastructure protection

Backend Service

- Encrypted Storage: Logs stored encrypted - we cannot read your data

- Fast Indexing: Efficient search capabilities on encrypted metadata

- Retention Management: Automatic data lifecycle management

- Query Engine: High-performance log retrieval and filtering

- Multi-region Support: Choose your preferred data residency region
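This page does not explain how the Backend can search metadata it cannot decrypt. One well-known technique that fits the description is a blind index, which works like this:

- The client computes a keyed HMAC of each searchable field value, using a key the server never sees.
- The server stores these tokens and answers exact-match queries by comparing them.

Whether LogFlux uses this technique is an assumption, and `blind_token` is a hypothetical name.

```python
import hashlib
import hmac


def blind_token(index_key: bytes, field: str, value: str) -> str:
    """Deterministic search token for an exact-match lookup on an encrypted field.

    Computed client-side; the server can compare tokens for equality
    without learning the underlying values.
    """
    # Separate field and value with a NUL byte so ("a", "bc") != ("ab", "c")
    message = f"{field}\x00{value}".encode("utf-8")
    return hmac.new(index_key, message, hashlib.sha256).hexdigest()
```

The trade-off is that equal values always produce equal tokens. The server therefore learns which entries share a value, though not what the value is.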

Benefits of Managed Backend

- Zero Maintenance: We handle all infrastructure, scaling, and operations

- High Availability: Built-in redundancy with 99.9% uptime SLA

- Compliance Ready: SOC 2 and GDPR compliant, with data residency options

- Scalable: Automatically scales with your log volume

- Cost-effective: Pay only for what you use with transparent pricing
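To put the 99.9% uptime SLA in concrete terms, the function below converts an availability target into a downtime budget. At 99.9%, 0.1% of a 30-day month is 43.2 minutes, and 0.1% of a 365-day year is about 8.76 hours. This page does not state how LogFlux measures uptime or which period the SLA uses.

```python
def allowed_downtime_minutes(availability: float, period_days: float) -> float:
    """Maximum downtime in minutes permitted by an availability target over a period."""
    if not 0.0 <= availability <= 1.0:
        raise ValueError("availability must be a fraction between 0 and 1")
    return (1.0 - availability) * period_days * 24 * 60
```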

3. LogFlux Clients - Analysis and Monitoring Tools

LogFlux Clients run in your environment and provide various interfaces for searching, analyzing, and monitoring your logs. All decryption happens locally on your systems - LogFlux never has access to your plaintext logs.

Desktop GUI Application

- Native Desktop App: Available for Windows, macOS, and Linux

- Visual Analytics: Charts, graphs, and dashboards for log analysis

- Advanced Filtering: Point-and-click filter creation and saved searches

- Real-time Monitoring: Live log streaming with customizable alerts

- Export Capabilities: Export logs and reports in multiple formats

Command Line Interface (CLI)

- Powerful CLI Tool: Perfect for automation, scripting, and DevOps workflows

- Complex Queries: Advanced search with filters, time ranges, and regex

- Integration Ready: Easy integration with existing tools and pipelines

- Batch Operations: Bulk log analysis and processing capabilities
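This page does not show the CLI's query syntax. As a rough illustration, the sketch below applies a time-range filter, a regex, and field filters to logs that have already been decrypted locally. `filter_entries` and the entry shape (`ts`, `message`, plus arbitrary fields) are hypothetical, and the time range is half-open: start inclusive, end exclusive.

```python
import re
from datetime import datetime
from typing import Any, Iterable, Iterator


def filter_entries(
    entries: Iterable[dict],
    start: datetime,
    end: datetime,
    pattern: str,
    **fields: Any,
) -> Iterator[dict]:
    """Yield entries with start <= ts < end whose message matches `pattern`
    and whose fields equal every keyword filter given."""
    regex = re.compile(pattern)
    for e in entries:
        if not (start <= e["ts"] < end):
            continue  # cheapest check first: outside the time window
        if not regex.search(e["message"]):
            continue
        if all(e.get(k) == v for k, v in fields.items()):
            yield e
```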

Terminal User Interface (TUI)

- Interactive Terminal UI: Rich terminal interface with live updates

- Keyboard-driven Navigation: Efficient workflow without leaving the terminal

- Split Views: Monitor multiple log streams simultaneously

- Real-time Tail Mode: Live log streaming with highlighting and filtering

Development Plugins

- Grafana Plugin: Native LogFlux data source for Grafana dashboards

- VS Code Extension: Log inspection and analysis directly in your IDE

- Custom Integrations: REST API for building custom analysis tools

Data Flow

Understanding how data flows through LogFlux helps in optimizing your logging strategy:

- Log Generation: Your applications and infrastructure generate log entries

- Collection: The LogFlux Agent captures logs using plugins or SDKs

- Client-side Encryption: Logs are encrypted with AES-256-GCM in your environment

- Secure Transmission: Encrypted logs are sent to the LogFlux Backend over TLS

- Encrypted Storage: The Backend stores logs without being able to decrypt them

- Retrieval: LogFlux Clients query and retrieve encrypted logs from the Backend

- Client-side Decryption: Clients decrypt logs locally for analysis and viewing

- Analysis: Use Client tools to search, filter, and analyze your decrypted logs

Zero-Knowledge Architecture

LogFlux follows a zero-knowledge architecture where:

- Encryption keys never leave your environment

- LogFlux cannot decrypt your log data

- Only your clients can read your logs

- End-to-end encryption from agent to client

Security Architecture

LogFlux implements a zero-knowledge, end-to-end encryption architecture so that only systems holding your keys can read your log data:

Zero-Knowledge Architecture

- Client-side Encryption: All logs encrypted with AES-256-GCM in your environment before transmission

- Private Key Management: Encryption keys never leave your systems

- No Plaintext Access: LogFlux cannot decrypt or read your log data

- End-to-End Security: Data remains encrypted from agent to client

Authentication & Authorization

- API Key Authentication: Unique keys for each application with HMAC signatures

- Scoped Access: Keys tied to specific customers and applications

- Personal Access Tokens (PATs): Secure tokens for client authentication

- Multi-tenant Isolation: Complete logical and cryptographic separation between customers
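This page does not specify exactly what LogFlux signs. The sketch below shows a common shape for HMAC request signing, under that assumption:

- The client signs the method, path, a timestamp, and a SHA-256 hash of the body with the application's secret.
- The verifier rejects stale timestamps to limit replay.
- The verifier recomputes the signature and compares it in constant time.

The canonical string format and the `/v1/ingest` path used in the test are hypothetical.

```python
import hashlib
import hmac
import time
from typing import Optional


def sign_request(secret: bytes, method: str, path: str, body: bytes, timestamp: int) -> str:
    """HMAC-SHA256 over a canonical string built from the request."""
    body_hash = hashlib.sha256(body).hexdigest()
    canonical = "\n".join([method.upper(), path, str(timestamp), body_hash])
    return hmac.new(secret, canonical.encode("utf-8"), hashlib.sha256).hexdigest()


def verify_request(
    secret: bytes,
    method: str,
    path: str,
    body: bytes,
    timestamp: int,
    signature: str,
    now: Optional[int] = None,
    max_skew: int = 300,
) -> bool:
    """Recompute the signature; reject requests whose timestamp is too far off."""
    now = int(time.time()) if now is None else now
    if abs(now - timestamp) > max_skew:
        return False
    expected = sign_request(secret, method, path, body, timestamp)
    return hmac.compare_digest(expected, signature)  # constant-time comparison
```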

Encryption Implementation

Agent-Side Encryption

- AES-256-GCM: Industry-standard authenticated encryption

- Unique Keys: Separate encryption keys per application

- Key Derivation: Secure key derivation from customer secrets

- Tamper Detection: Each ciphertext carries a GCM authentication tag, so any modification is detected and rejected at decryption
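This page says only that per-application keys are derived from customer secrets. It does not name the key derivation function. HKDF (RFC 5869) is a standard choice: it expands one high-entropy secret into independent 256-bit keys by varying the `info` input, here with the application ID. Treat this as a sketch under that assumption; the salt value and the `app_key` helper are hypothetical.

```python
import hashlib
import hmac


def hkdf_sha256(ikm: bytes, salt: bytes, info: bytes, length: int = 32) -> bytes:
    """HKDF (RFC 5869) with SHA-256: extract a pseudorandom key, then expand it."""
    if length > 255 * 32:
        raise ValueError("length too large for HKDF-SHA256")
    # Extract: PRK = HMAC(salt, IKM); an absent salt means HashLen zero bytes
    prk = hmac.new(salt or b"\x00" * 32, ikm, hashlib.sha256).digest()
    # Expand: T(i) = HMAC(PRK, T(i-1) || info || i), concatenated until long enough
    okm, block, counter = b"", b"", 1
    while len(okm) < length:
        block = hmac.new(prk, block + info + bytes([counter]), hashlib.sha256).digest()
        okm += block
        counter += 1
    return okm[:length]


def app_key(customer_secret: bytes, app_id: str) -> bytes:
    """Derive a distinct 256-bit key per application from one customer secret."""
    return hkdf_sha256(customer_secret, salt=b"logflux-demo-salt", info=f"app:{app_id}".encode())
```

Two caveats apply:

- HKDF assumes the input secret is already high-entropy. A human-chosen password would first need a password-hashing function.
- The derived key would then go to an AES-256-GCM implementation from a vetted cryptography library, not to hand-written code.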

Client-Side Decryption

- Local Decryption: All decryption happens on your systems

- Key Management: Clients manage their own decryption keys

- Secure Storage: Keys stored securely on client systems

- No Key Transmission: Keys never sent over the network

Data Protection

- Encryption in Transit: TLS 1.3 for all API communications

- Encrypted at Rest: All stored logs remain encrypted with customer keys

- Data Isolation: Complete cryptographic separation between customers

- Customer-Specific URLs: Dedicated subdomains for enhanced isolation

- Access Logging: Comprehensive audit trail of all encrypted data access

Compliance & Privacy

- Zero-Knowledge Provider: We cannot access your log content

- Data Residency: Choose between EU, US, and other regions

- GDPR Compliant: Right to deletion, data portability, and privacy by design

- SOC 2 Type II: Enterprise security controls and audit compliance

- HIPAA Compatible: Suitable for healthcare and sensitive data logging

- Data Sovereignty: Your keys, your data, your control

Scalability & Performance

LogFlux is built to scale with your needs:

Horizontal Scaling

- Agents: Deploy as many LogFlux Agents as needed

- Ingestors: Automatically scale based on load

- Storage: Virtually unlimited log storage

Performance Optimization

- Compression: Logs are compressed for efficient storage

- Indexing: Smart indexing for sub-second search results

- Caching: Intelligent caching for frequently accessed data

- Load Balancing: Automatic distribution across multiple servers

Best Practices

Agent Deployment

- Choose the Right Integration: Use plugins for infrastructure, SDKs for applications

- Secure Key Management: Store encryption keys securely and rotate them regularly

- Configure Local Buffering: Size buffers appropriately for your log volume

- Monitor Agent Health: Track metrics like encryption performance and transmission rates

- Use Structured Logging: JSON format enables better filtering and analysis
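For applications using Python's standard `logging` module, a formatter that writes one JSON object per line is a small first step toward structured logs. The sketch below is an assumption rather than part of any LogFlux SDK. It merges a hypothetical `fields` dict, passed through logging's `extra=` argument, into each line.

```python
import json
import logging


class JsonFormatter(logging.Formatter):
    """Render each record as one JSON object per line."""

    def format(self, record: logging.LogRecord) -> str:
        payload = {
            "ts": self.formatTime(record, "%Y-%m-%dT%H:%M:%S%z"),
            "level": record.levelname,
            "logger": record.name,
            "message": record.getMessage(),
        }
        # logging copies keys from extra={...} onto the record as attributes
        payload.update(getattr(record, "fields", {}))
        if record.exc_info:
            payload["exc"] = self.formatException(record.exc_info)
        return json.dumps(payload)
```

Usage: `logger.info("order placed", extra={"fields": {"order_id": 42}})` produces a line whose `order_id` key can be filtered on directly.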

Security Best Practices

- Key Rotation: Regularly rotate encryption keys and API keys

- Least Privilege: Use separate API keys per application with minimal required permissions

- Secure Key Storage: Store encryption secrets in secure key management systems

- Network Security: Use private networks and firewalls to protect agent communications

- Audit Access: Regularly review who has access to encryption keys and client tools
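Rotating a key without downtime usually means accepting both the old and the new key for a while. The sketch below shows that overlap window for HMAC-signed API keys:

- Each signature is tagged with a key ID.
- New signatures use the current key.
- Verification succeeds for any key that has not yet been retired.

The class name and key IDs are hypothetical, and this page does not describe how LogFlux itself sequences a rotation.

```python
import hashlib
import hmac
from typing import Dict, Optional, Tuple


class SigningKeyring:
    """Holds API signing keys by ID so old keys keep verifying during rotation."""

    def __init__(self) -> None:
        self._keys: Dict[str, bytes] = {}
        self.current_id: Optional[str] = None

    def rotate(self, key_id: str, secret: bytes) -> None:
        """Add a new key and make it the one used for signing."""
        self._keys[key_id] = secret
        self.current_id = key_id

    def retire(self, key_id: str) -> None:
        """Remove an old key once nothing signs with it anymore."""
        self._keys.pop(key_id, None)

    def sign(self, message: bytes) -> Tuple[str, str]:
        if self.current_id is None:
            raise RuntimeError("no signing key configured")
        secret = self._keys[self.current_id]
        return self.current_id, hmac.new(secret, message, hashlib.sha256).hexdigest()

    def verify(self, key_id: str, message: bytes, signature: str) -> bool:
        secret = self._keys.get(key_id)
        if secret is None:
            return False  # unknown or retired key
        expected = hmac.new(secret, message, hashlib.sha256).hexdigest()
        return hmac.compare_digest(expected, signature)
```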

Client Usage

- Secure Client Deployment: Deploy clients in secure environments with proper access controls

- Local Key Storage: Use secure storage for decryption keys (OS keychain, vault systems)

- Save Common Queries: Create saved searches for frequent investigations

- Time-based Filtering: Use time ranges to improve query performance

- Export Compliance Data: Export critical logs for regulatory compliance and auditing

Performance Optimization

- Batch Log Transmission: Configure appropriate batch sizes for your network

- Compression: Enable compression for large log volumes

- Regional Deployment: Choose backend regions close to your infrastructure

- Indexing Strategy: Structure logs to optimize search performance

- Client Caching: Use client-side caching for frequently accessed log data
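A client-side cache for repeated queries can be as simple as a least-recently-used map, as sketched below. Cached results are decrypted plaintext, so in this design they stay in process memory and never go to disk. `QueryCache` is a hypothetical name, and the entry-count capacity is an assumption.

```python
from collections import OrderedDict
from typing import Any, Optional


class QueryCache:
    """Least-recently-used cache for decrypted query results, held in memory only."""

    def __init__(self, capacity: int) -> None:
        self.capacity = capacity
        self._data: OrderedDict = OrderedDict()

    def get(self, query: str) -> Optional[Any]:
        if query not in self._data:
            return None
        self._data.move_to_end(query)  # mark as most recently used
        return self._data[query]

    def put(self, query: str, results: Any) -> None:
        self._data[query] = results
        self._data.move_to_end(query)
        if len(self._data) > self.capacity:
            self._data.popitem(last=False)  # evict the least recently used entry
```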

Getting Started

Ready to implement LogFlux’s zero-knowledge logging in your infrastructure?

- Create an Account: Get your API keys and set up encryption

- Deploy LogFlux Agent: Install the agent with plugins or SDKs

- Configure Encryption: Set up client-side encryption keys

- Install LogFlux Clients: Deploy clients for log analysis

Next Steps

- API Reference: Detailed API documentation for agent and client integration

- SDKs Documentation: Language-specific guides for custom application integration

- Security Best Practices: Secure your zero-knowledge logging infrastructure